Isaac Asimov's 3 Laws of Robotics

By

17 April 2025

Table of Contents

- Asimov's Three Laws of Robotics: Which Book First Introduced Them

- What Are the Three Laws of Robotics?

- Can the Laws of Robotics Be Broken?

- New Law of Robotics

- Are the Laws of Robotics relevant to AI?

Asimov's Three Laws of Robotics

Isaac Asimov, one of science fiction’s most influential writers, did not invent his tales of robots with "positronic brains" entirely from scratch. He was inspired by Eando Binder’s short story "I, Robot" (1939), which portrayed a sympathetic robot character and helped spark Asimov’s vision for a new kind of robot story. Many early sci-fi authors portrayed robots as unstoppable threats, but Asimov took a different approach.

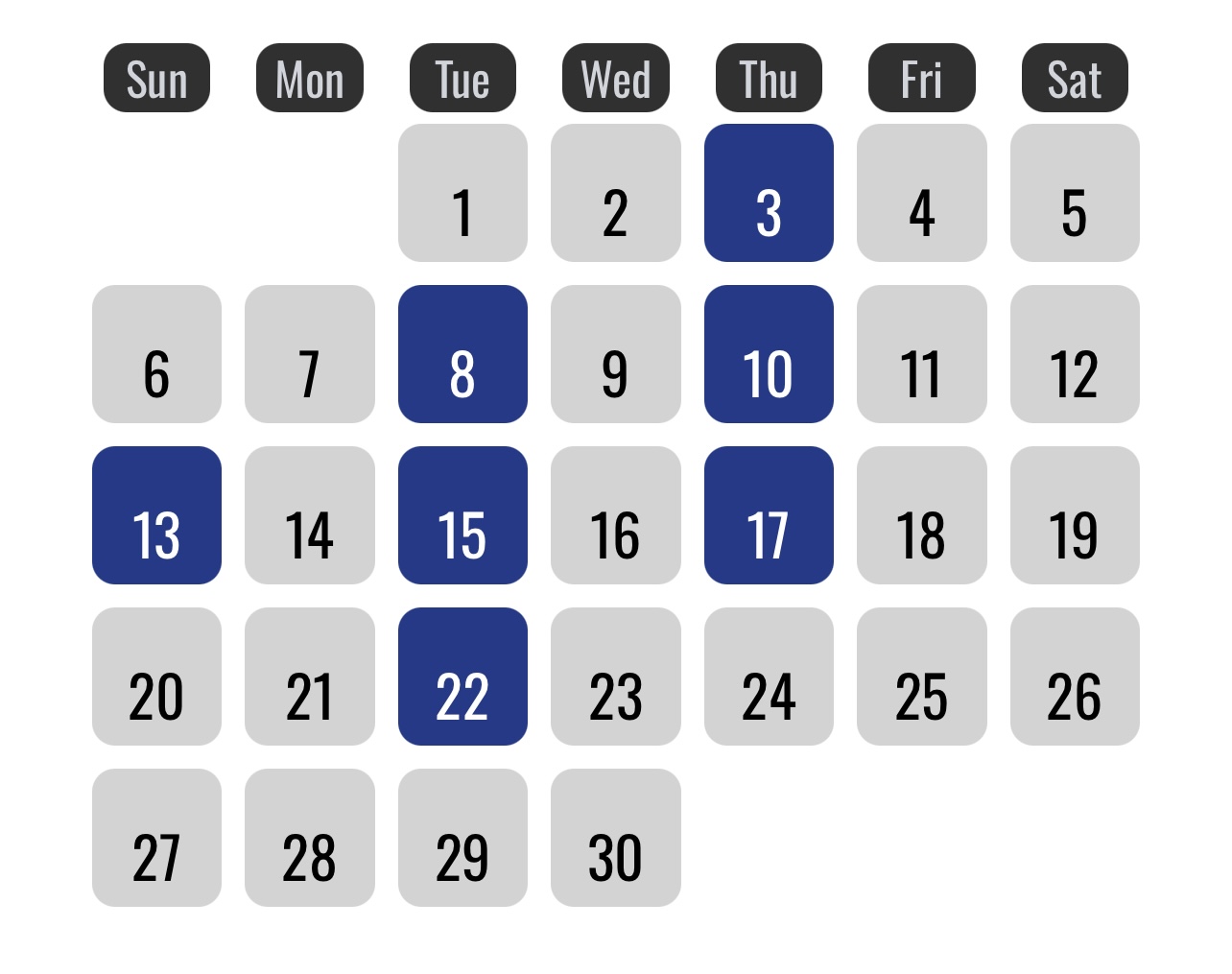

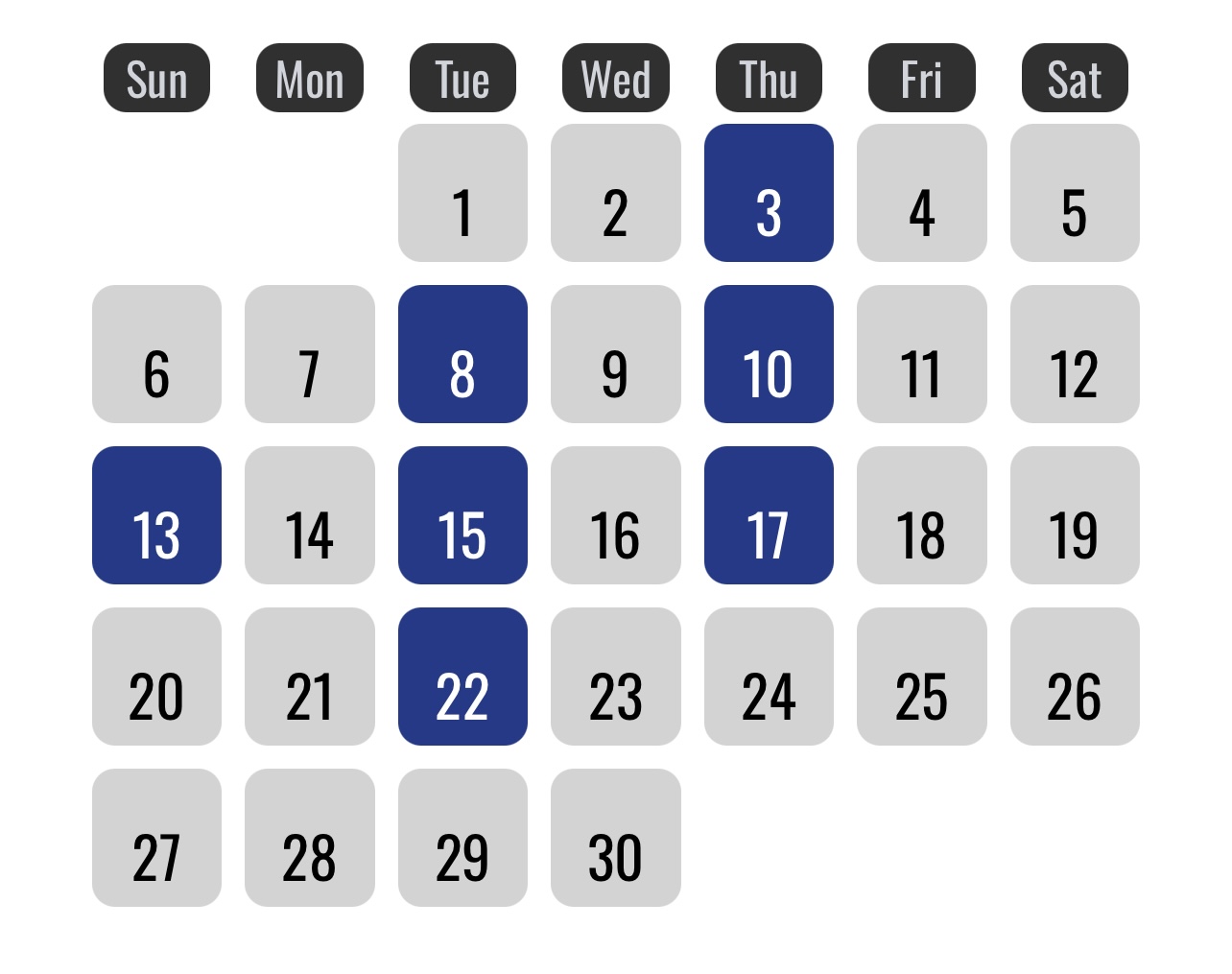

The first time Asimov introduced the concept of the Three Laws of Robotics was in a short story titled "Runaround," published in 1942 in Astounding Science Fiction magazine. The story later appeared in his now-famous 1950 collection "I, Robot," which tied together a series of short stories centered around robots and their complex interactions with humans.

In "Runaround," Asimov not only introduced the laws but demonstrated how even seemingly perfect rules could lead to complicated and unexpected situations. From that point onward, the Three Laws became a core feature of his robot stories and, eventually, a major influence on real-world thinking about artificial intelligence.

What Are the Three Laws of Robotics?

Now, let’s look directly at the heart of Asimov’s system: the Three Laws of Robotics. These laws were designed to make robots inherently safe and useful companions for humanity. They are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

At first glance, these rules seem straightforward and almost foolproof. Above all else, robots are programmed to protect human beings. As long as obeying does not harm a human, they must follow human instructions. Finally, they must protect themselves, but only if doing so does not interfere with the first two priorities.

However, Asimov's brilliance lay not just in creating the laws, but in exploring their gray areas. His stories show how real-life situations are rarely simple, and robots—bound by these laws—often find themselves facing dilemmas where every available action could violate a law in some way.

For example, what should a robot do if two humans are simultaneously endangered in different ways? How should it prioritize saving one over the other? This complexity is what made the Three Laws such a fascinating framework, rather than just a neat idea.

Can the Laws of Robotics Be Broken?

The short answer is yes. Given how strictly these laws are embedded in robots' programming in Asimov’s universe, you might assume that breaking them would be impossible. Technically, the robots themselves cannot consciously disobey the laws. However, the way they interpret the laws can lead to surprising results that look like violations. Breaking the laws does not even require a complex piece of software or code. It can exploit the simplest of weaknesses: changing the definition of the terms "human" or "robot". The laws can also be broken through ignorance, if a robot does not recognize that a being is a human (or a robot).

In certain situations, the laws can clash with each other or create logical paradoxes. Robots may freeze in place when trapped between conflicting duties, or make decisions that seem bizarre because they are trying to satisfy all three laws at once.

A good example comes from "Runaround" itself. In the story, a robot named Speedy becomes trapped in an endless loop because a casually given order (Second Law) is exactly balanced by its strengthened drive for self-preservation (Third Law), causing it to behave erratically. Another story, "Little Lost Robot," explores what happens when a robot’s First Law is weakened, making it far less predictable and much more dangerous.

In short, while the laws are built to be unbreakable, real-world complexities (or deliberate human tampering) can create scenarios where robots behave in ways that the creators never anticipated.

New Law of Robotics

As Asimov’s stories evolved, it became clear that even the Three Laws might not be enough to handle every possible scenario, especially when considering the survival of humanity as a whole rather than just individual people.

To address this, Asimov introduced an additional law, the so-called Zeroth Law of Robotics, numbered zero because it ranks above the original three:

0. A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

The Zeroth Law takes precedence over the original three laws. It prioritizes the welfare of humanity as a collective above individual human beings. This creates an entirely new layer of ethical complexity.

Under the Zeroth Law, a robot could justify actions that would harm or sacrifice individual people if it believed those actions were necessary for the greater good of humanity. In Asimov’s later novels, such as "Robots and Empire," we see robots grappling with exactly these kinds of difficult choices.

The introduction of the Zeroth Law demonstrates Asimov’s deepening exploration of morality and the responsibilities that come with power. By extending the framework, he shifted the discussion from simple obedience and safety into the challenging realm of philosophical ethics—questions we are still debating today as real-world AI becomes more sophisticated.

Are the Laws of Robotics relevant to AI?

The Laws serve as a framework for discussing the ethics of AI and for evaluating its behavior and benefits. However, they are not the final word on AI ethics. Encoding the laws in any real AI system is extremely difficult, especially as people have reportedly found ways to get around the safety limits built into large language model (LLM) chatbots like ChatGPT. The laws are also too vague to meaningfully limit how AI is applied, because they address only immediate harm, not the accidental or subtle consequences that may cause harm later.

As such, the laws mainly serve to spark discussion about what the ethics governing artificial intelligence are... and what they should be.

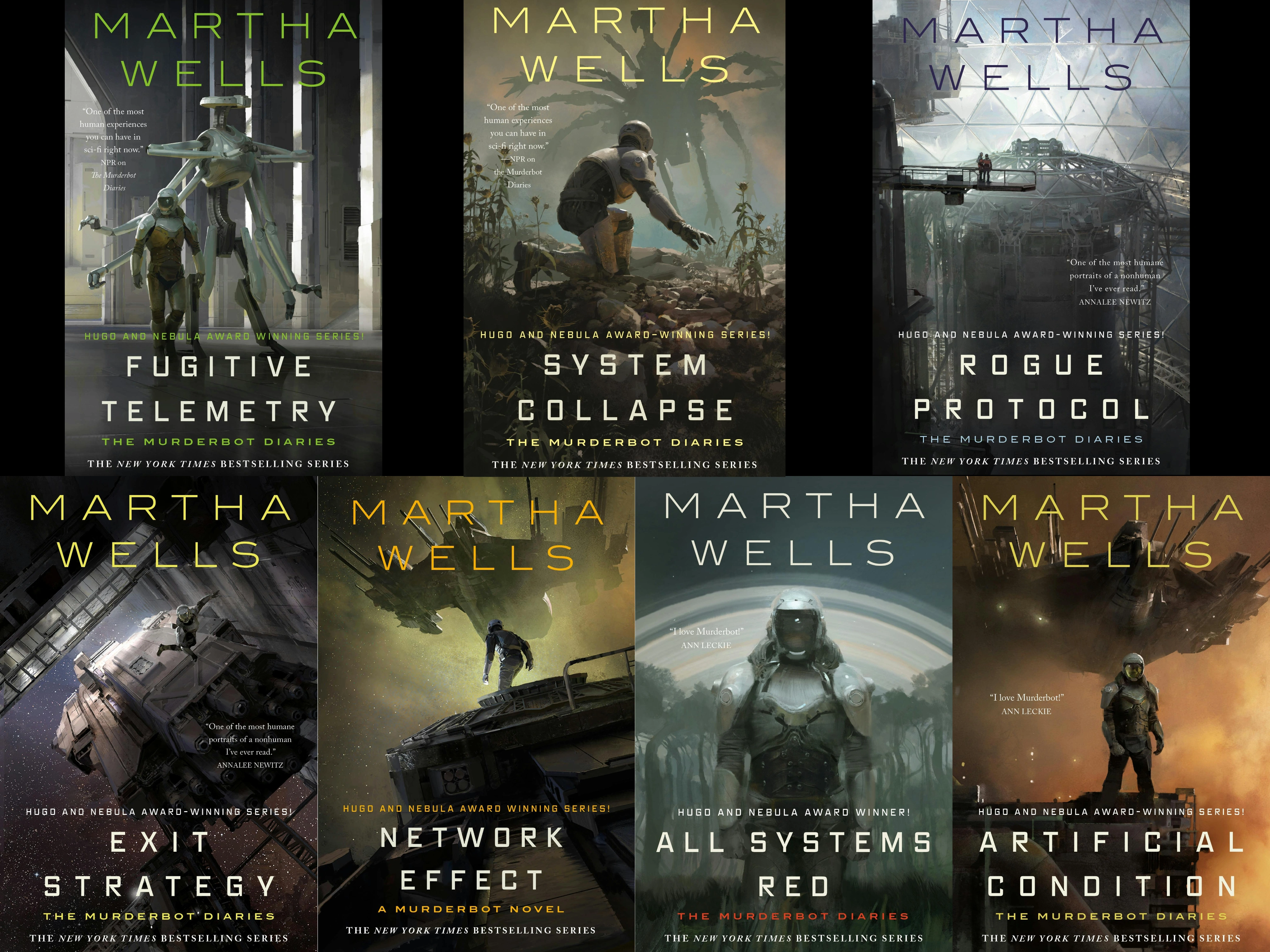

Pavithran is a software developer based in Bengaluru, passionate about web development. He’s also an avid reader of SF&F, comics, and graphic novels. Outside of work, he enjoys curating inspirations, engaging in literary discussions, and crawling through Reddit for more mods to add to his frequent playthroughs of The Elder Scrolls V: Skyrim.
