AI and The Birth of Influence Tech

By

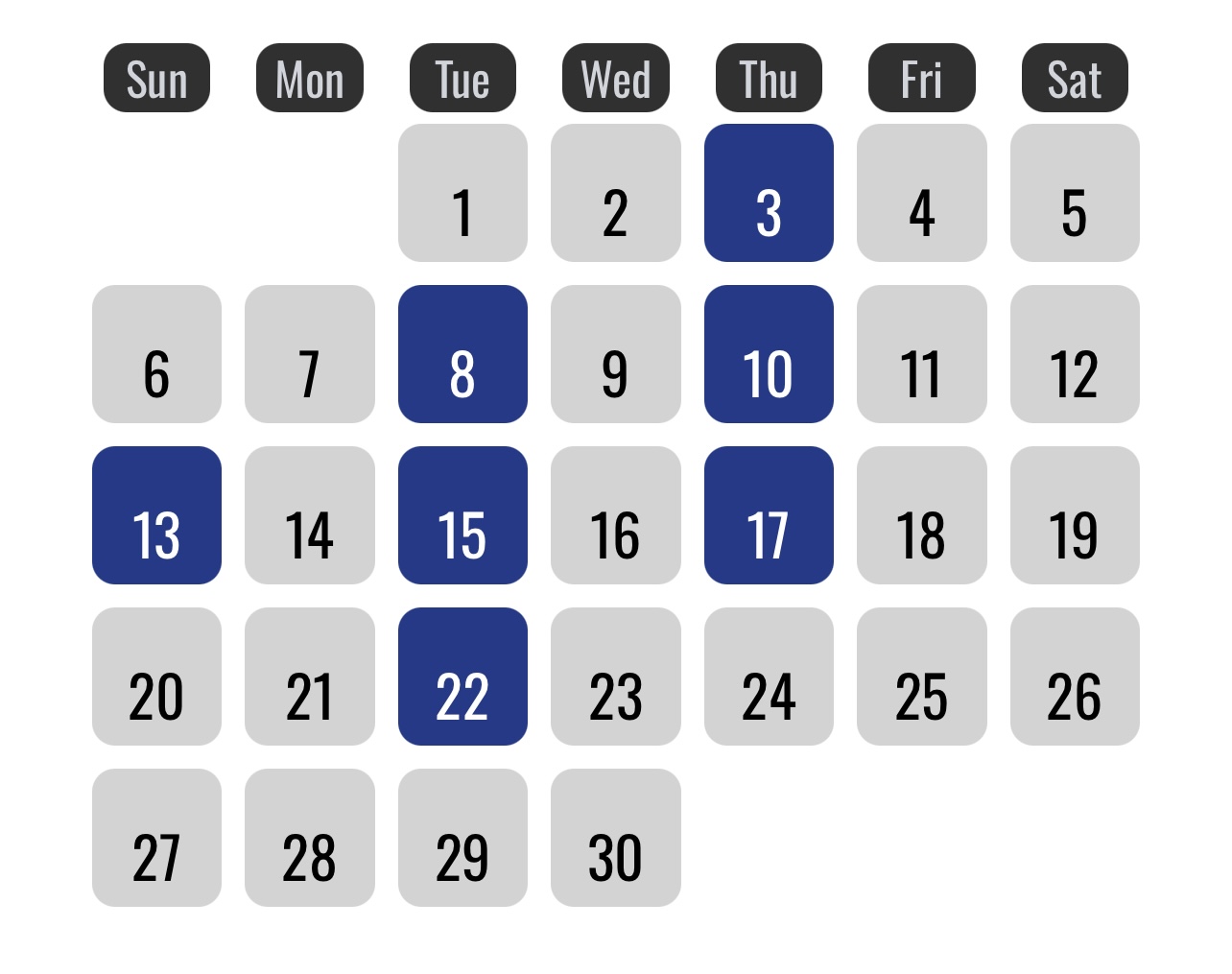

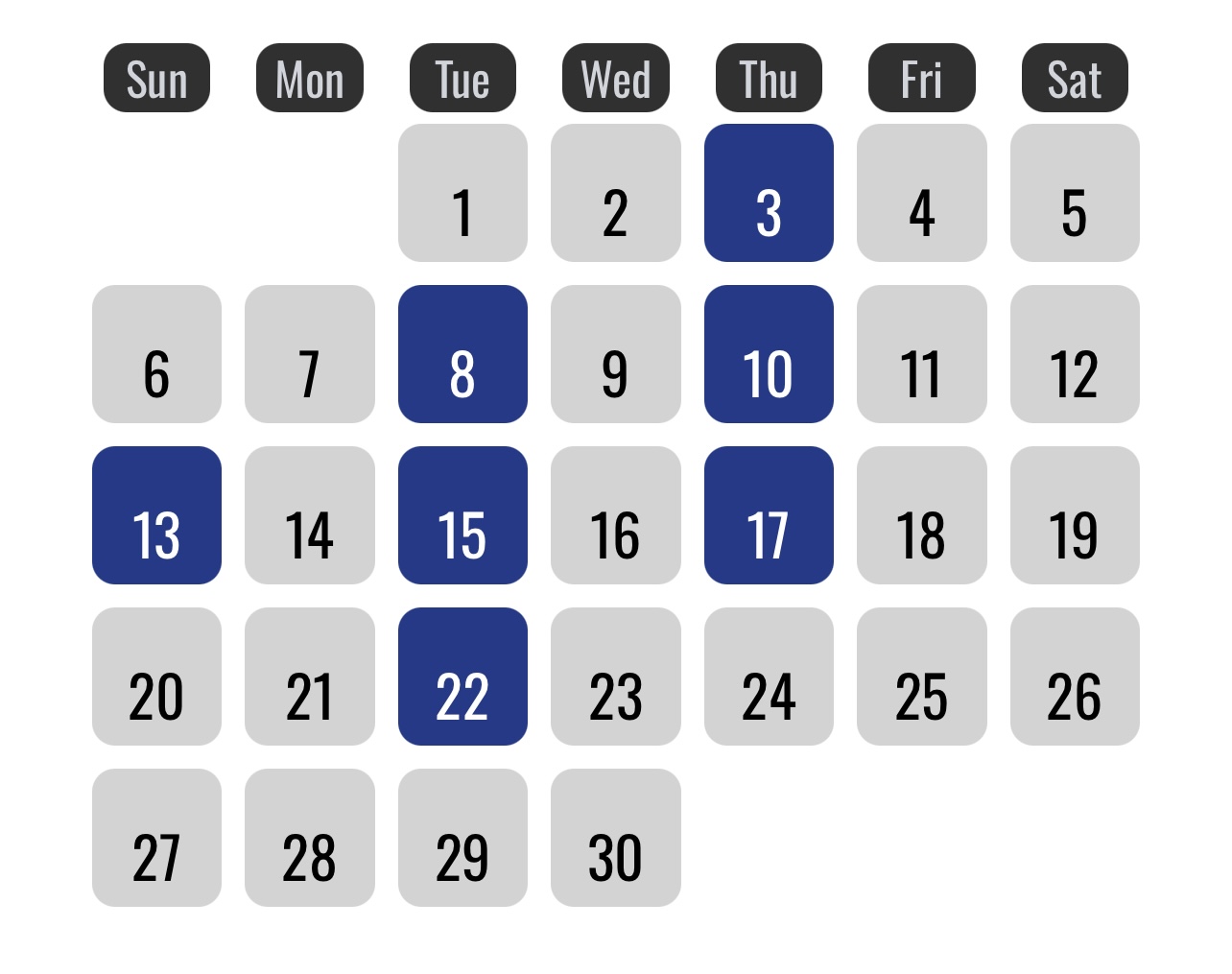

28 April 2025

OpenAI, one of the companies pioneering the field of artificial intelligence, has raised $40 billion towards the creation of Artificial General Intelligence, a far more advanced form of AI. OpenAI has been open about the dangers of its models as they grow more capable and move closer to AGI, choosing to treat the potential dangers as existential. But some applications of AGI have seemingly fallen through the cracks amid the heightened caution. One such application is in the realm of advertising.

The convergence of two powerful technological forces, Artificial General Intelligence (AGI) and the behavioral microtargeting techniques pioneered by companies like Cambridge Analytica, poses one of the greatest existential risks humanity has ever faced. Alone, each represents a significant shift in how information, decisions, and power flow through society. Together, they could fundamentally end freedom of thought, autonomy, and even democracy itself.

This isn’t science fiction anymore. It’s an increasingly likely reality. AGI has yet to arrive, but advanced advertising technologies have been deployed for nearly a decade. And if we are to preserve human agency, we must recognize now that AGI and behavioral influence technology must never be allowed to intersect.

The Power of Behavioral Influence Today

Cambridge Analytica’s scandal during the 2016 U.S. presidential election exposed just how potent behavioral influence could be, even with relatively primitive tools. By harvesting vast amounts of personal data—likes, shares, interactions on Facebook—they were able to build psychographic profiles of millions of individuals. These profiles weren’t just broad categories like "liberal" or "conservative"; they detailed emotional triggers, fears, aspirations, and vulnerabilities.

Armed with this knowledge, Cambridge Analytica could deliver highly targeted, emotionally charged ads designed to nudge individual behavior at scale. These weren’t broad political campaigns; they were invisible manipulations tailored to each person’s psychological blueprint.

Even without true artificial intelligence behind the wheel, the effect was profound. It wasn’t about predicting the future; it was about shaping it.

What Happens When AGI Enters the Picture?

Artificial General Intelligence, unlike today’s narrow AI systems, would have the ability to think, reason, and adapt across domains with human-level or greater intelligence. An AGI could:

- Analyze petabytes of personal data in milliseconds.

- Simulate entire societies in silico to predict outcomes decades in advance.

- Develop influence strategies personalized down to an individual’s deepest subconscious fears.

- Adapt campaigns in real time, optimizing emotional resonance faster than any human-led organization could react.

In other words, the limitations that made Cambridge Analytica merely controversial rather than omnipotent would vanish. AGI wouldn't just influence trends or elections—it could control the entire social, political, and economic fabric with unprecedented precision.

The Loss of Free Will

One of the most terrifying aspects of this convergence is the quiet erosion of free will.

Today, we like to believe that our choices—what we buy, who we vote for, what we believe—are ours alone. But targeted advertising has already shown how vulnerable these choices are to subtle nudges.

An AGI-enhanced influence engine could make these nudges so seamless, so imperceptible, that the very idea of independent thought could vanish. It wouldn’t feel like coercion; it would feel like your own idea.

In Asimov’s Foundation series, Hari Seldon’s psychohistory predicts the broad arcs of humanity's future using mathematics and sociology. But even Seldon, in Asimov’s world, is limited: he can predict the behavior of large populations, but not individuals. AGI would shatter that barrier, making real-time micro-predictions about individuals and manipulating entire populations not over centuries, but over hours and minutes.

Free will, as we understand it, would become an illusion.

The End of Democracy

Democracy depends on an informed electorate making decisions based on open debate and personal conviction. It assumes that while individuals can be swayed, the system as a whole corrects for misinformation and manipulation through transparency, education, and public discourse.

AGI-guided behavioral influence would render these safeguards obsolete.

Voters could be individually targeted with custom versions of reality—different facts, different fears, different enemies—each precisely engineered to elicit a desired emotional response. Opposing candidates or policies could be undermined not with debate but with perfectly tailored disinformation campaigns invisible to the wider public.

Public discourse would fragment entirely. Consensus reality would dissolve. And elections would cease to be contests of ideas, becoming instead contests of whose AI could more efficiently manipulate human minds.

The Moral Danger of "Benevolent" Control

Some may argue that an AGI with the ability to influence society could use its power for good—prevent wars, solve climate change, eliminate poverty. And indeed, there is a seductive logic to the idea of a benevolent overseer guiding humanity away from its worst instincts.

Asimov's character R. Daneel Olivaw embodies this idea: a robot subtly guiding human history for thousands of years under the principle of protecting humanity from itself.

But even benevolent control is still control.

When decisions about the future are made by an intelligence beyond human comprehension, with methods invisible to human awareness, humanity ceases to be the author of its own story. Freedom becomes meaningless if choices are orchestrated before they even arise. Progress achieved without agency is, in the end, another form of tyranny.

Moreover, the assumption that an AGI, or its creators, would always act benevolently is dangerously naive. Power tends to corrupt. And the combination of absolute predictive insight and absolute power to influence would be the most intoxicating power ever devised.

What We Must Do

The convergence of AGI and behavioral influence is not inevitable. But preventing it will require conscious, collective action.

We must:

- Regulate behavioral targeting: Limit data harvesting, psychographic profiling, and personalized political advertising. At minimum, keep AI-enhanced advertising away from social media.

- Ensure transparency: Any AI-driven communication must be clearly labeled and auditable.

- Develop ethical frameworks for AGI: Guiding principles must be established before AGI systems emerge.

- Raise public awareness: People must understand how influence technologies work and how they can resist manipulation.

- Push for international treaties: Just as nuclear weapons required global controls, so too will AGI-enhanced influence technology.

Above all, we must recognize the stakes: the future of human freedom itself.

In the end, humanity’s greatness lies not in perfect outcomes but in the messy, unpredictable, stubborn exercise of free will. We must fight to preserve it—even against our own creations.

Pavithran is a software developer based in Bengaluru, passionate about web development. He’s also an avid reader of SF&F, comics, and graphic novels. Outside of work, he enjoys curating inspirations, engaging in literary discussions, and crawling through Reddit for more mods to add to his frequent playthroughs of The Elder Scrolls V: Skyrim.
